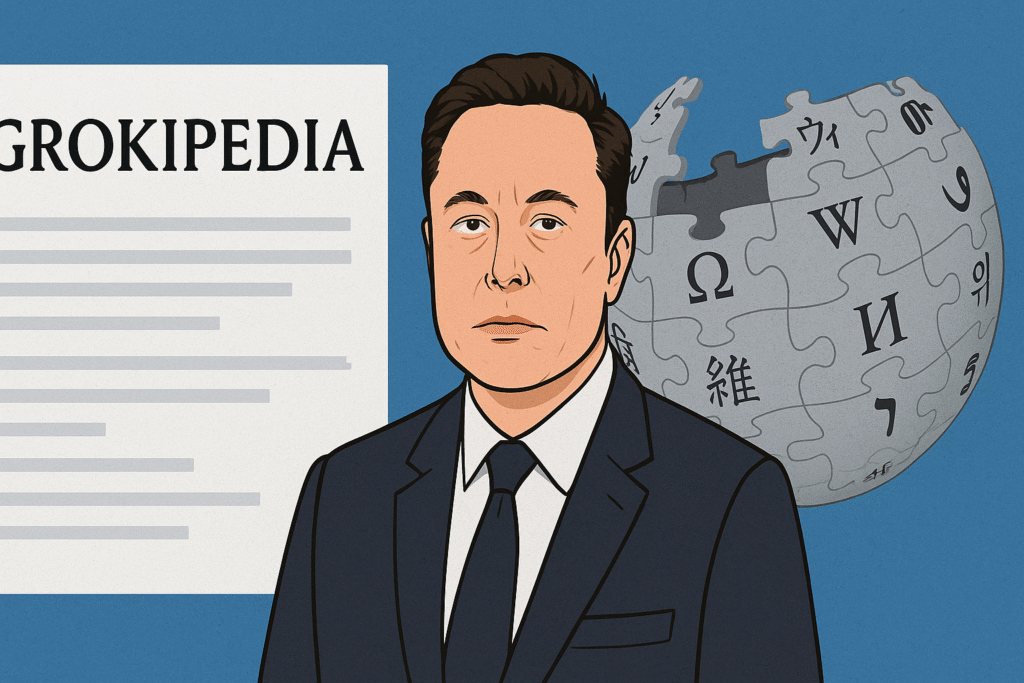

Elon Musk’s new AI-powered encyclopaedia promises to “purify” knowledge—but replacing many human editors with one algorithm raises fresh questions about bias and control.

Elon Musk recently launched Grokipedia, an online encyclopaedia that he presents as a corrective to what he calls Wikipedia’s left-wing bias.

Grokipedia is generated and curated by Grok, the AI chatbot integrated with Musk’s social network X; its backers say it will purge knowledge of ideological distortion.

Critics warn that algorithmic curation under a single owner may produce a different — and no less dangerous — form of editorial control.

What is Grokipedia?

Grokipedia is an AI-driven online encyclopaedia launched by xAI, Elon Musk’s artificial-intelligence company, at the end of October 2025.

Unlike Wikipedia (a volunteer-edited project that is more than two decades old and available in hundreds of languages), Grokipedia relies primarily on content generated and fact-checked by Grok, the large language model developed by Musk’s xAI.

At launch, Grokipedia presented itself as an alternative designed to remove what Musk calls “propaganda” from public reference material.

Why did Musk build it?

Musk has long criticised Wikipedia, accusing it of ideological bias and describing its work as driven by “woke” perspectives.

The creation of Grokipedia follows years of public complaints, calls for his followers to stop donating to Wikipedia and, after his 2022 acquisition of Twitter (since renamed X), use of the platform to campaign against the Wikimedia model.

For Musk, the encyclopaedia is not merely a product: it is part of a broader ambition to move from human collaborative knowledge towards algorithmic, centrally governed knowledge.

How does Grokipedia differ from Wikipedia?

Authorship and editing. Wikipedia is edited by volunteers across the globe; discussions, edit histories and community governance are integral to its model. Grokipedia, by contrast, is produced and moderated by a single AI system, with human intervention limited and centrally controlled.

Scale and maturity. Wikipedia hosts many millions of articles across hundreds of languages and has been refined over more than twenty years. Grokipedia launched as v0.1 with several hundred thousand entries — a fraction of Wikipedia’s size and without the mature governance structures Wikipedia has developed.

Transparency and accountability. Wikipedia publishes revision histories, talk pages and references that allow readers to trace how an article evolved. Grokipedia’s editorial decisions and the training data or rules behind Grok are far less transparent, meaning users cannot easily trace why a passage reads as it does.

Why do scholars see red flags?

Scholars, technologists and Wikimedians have raised several objections:

- Bias and framing: AI systems reflect the data and incentives of their creators. Critics argue that Grokipedia will embody Musk’s perspectives and the biases present in its training sources.

- Hallucinations and factual errors: Large language models can produce plausible-sounding falsehoods. Relying on an AI as the principal writer or fact-checker risks introducing inaccuracies at scale.

- Centralisation of editorial power: Replacing a distributed volunteer community with a single, corporate-run system concentrates control over public reference material in the hands of a few.

- Intellectual property and dependence: Early examinations suggested that some Grokipedia entries closely mirrored material from existing sources, including Wikipedia itself, raising questions about sourcing and originality.

Are claims of ‘neutrality’ credible?

Musk asserts that AI “doesn’t care about ideology, it cares about accuracy.” Yet neutrality in technology is a contested notion: system design, training data and operational choices encode values and priorities.

Researchers note there is no neutral technology; even so-called objective algorithms reflect decisions made by their builders. Consequently, critics say Grokipedia’s neutrality claim is at best premature and at worst misleading.

What might this mean for public knowledge?

The arrival of Grokipedia signals a broader shift in how reference knowledge is produced. If algorithm-driven encyclopaedias gain readership, shared public reference points may fragment.

Competing “truths” could coexist — each shaped by its proprietors’ priorities. For educators, students and the public, the result could be greater emphasis on source-awareness and cross-checking, but also more contestation about what counts as a reliable fact.

In practical terms, Wikipedia remains, for now, the more established, transparent and collaboratively governed resource. Grokipedia is nascent, ambitious and controversial, and its long-term impact remains uncertain.

Who benefits — and who loses?

Proponents argue that Grokipedia could correct perceived ideological blind spots and offer alternative framing for contested topics.

Opponents counter that the platform primarily benefits those who favour a strong, central arbiter of truth, and that it risks disenfranchising the many editors, historians and domain experts who contribute to communal knowledge projects.

The central question is not merely which facts are published, but who gets to select and present them.

How to view Musk’s bid to own knowledge

The launch of Grokipedia could be a defining moment in the evolving politics of information. It sharpens a fundamental debate about how societies produce, curate and trust knowledge.

Is the future one of decentralised, peer-reviewed wisdom or of centralised, algorithmically curated truth?

For now, Grokipedia stands as an experiment in the latter. Its arrival should prompt readers, teachers and policy-makers to ask hard questions about transparency, bias and power — and to insist, as ever, that claims of neutrality be examined, not accepted at face value.